by Laurence | Mar 10, 2008 | Local search

From Canada’s Financial Post, here’s an interesting summing-up of last year’s Geosign implosion, courtesy of Ahmed Farooq of iBegin.

(Alas, I had skipped over an earlier post on this topic from Peter Krasilovsky, so this was mostly news to me.)

The short version: Geosign operated a bunch of domains that existed solely to serve ads. Some of these sites included ‘real’ content as a cynical fig leaf.

Googlers know how it goes: You search for ‘XYZ’ and click on an ad (or a result) that looks promising, only to land on a site full of more XYZ-related ads — some of which lead to yet more ad sites, the AdSense version of an infinite loop.

Since advertisers pay by the click, this provides easy money for companies that are willing to waste your time. ‘Arbitrage’ is the common — rather charitable — name for the method.

Google ultimately cut off Geosign, presumably because it was hurting the value of Google’s ads, and the company fell apart.

As a strategy, arbitrage isn’t so dissimilar from search-engine marketing (SEM), or even from search-engine optimization (SEO); it’s all a matter of degree. And when your content is advertising, as it is for Yellow Pages sites, the line gets even blurrier.

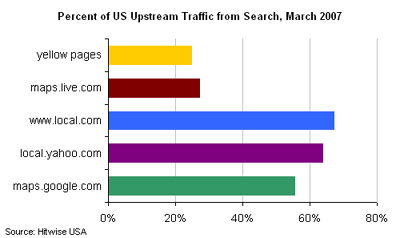

So what separates Geosign from the rest of the local universe, which also depends heavily on search-engine traffic? Witness this chart from Hitwise, recently highlighted by Mike Boland at Kelsey:

It’s arguable that Geosign is just the chart’s reductio ad absurdum. Obviously we can make distinctions, but I’d be worried if I were above, say, 35% on this chart and I weren’t Google or Yahoo.

OK, it’s definitely impressive that Local.com gets more of its traffic from search engines than does either Yahoo Local or Google Maps. Probably the same is true of Marchex, which operates domains like 20176.com.

But if Google and Yahoo want to move their own bars to the right, they can easily do so. It’ll come from the hide of Local.com, Marchex and similar companies.

And one big lesson of Geosign, scary and refreshing both, is that Google is willing to nuke a 9-digit business overnight.

by Laurence | Feb 26, 2008 | Local search

Not long ago I was talking with Gib Olander from Localeze, our main data supplier. The topic was local data, and how weird it can be: Some things look like they must be mistakes—except they’re not.

Gib’s example was a place that sells custom rims and also cellphone service. If you saw the business listed under both categories, you might figure one was wrong. But Gib can show you a photo of Cell ‘n’ Wheels that proves otherwise.

I laughed. The example wasn’t so terribly outrageous, and Localeze certainly has an interest in promoting this idea. Yet at the same time, I happened to know of a much better illustration.

I get my hair cut at a barber’s shop that also sells seafood. Oysters, specifically. During the holiday season it does a roaring trade in hams, too; they’re piled into a shopping cart by the door. No one seems to mind buying dinner from a place that can get ankle-deep in human hair.

Whatever about rims and phones, I’d definitely suspect an error if my search for [oysters] near [Leesburg, VA] returned Plaza & Tuffy’s Barber Shop as the top result. But it’s the only place in Leesburg that advertises oysters on a sign:

I shot this photo right before getting a haircut. When I went inside, my barber Bobby asked why I had been taking pictures. I told him I had a friend in Chicago who didn’t believe that a barber shop also sold seafood.

“We’re still country here,” he replied. “You tell him that.”

It got me to thinking: In reviewing YellowBot last year, I included a screengrab of something I portrayed as an error—YellowBot’s tags said that a hair-removal place here in Leesburg also sells bail bonds.

Perhaps I was too hasty in calling it a mistake?

by Laurence | Sep 19, 2007 | Local search

Yellow Pages folks surely do love structure — especially when it comes to data. Here at the latest Kelsey conference, where YP folks abound, the only good datum is a structured datum.

Consider the title of yesterday’s most interesting panel:

Building a Better Database: Acquiring Content in a Dysfunctional Environment

The title is a bit grad school, but “dysfunctional” is a strong word that caught my eye. Here it mostly means “resistant to structure.”

And them’s fightin’ words in the world of Yellow Pages.

By now I’ve gone to a bunch of YP-oriented conferences. All of them featured a discussion about how to gather structured data. But I’m starting to suspect that this isn’t the most important problem to solve — and not just because these conference discussions never go anywhere.

Here’s my thinking:

In what a YPer would call a functional environment, every business location, small or large, would authorize a regularly updated master version of its “attributes” (hours, certifications, parking facilities, etc.), and would post this information in some microformat on its Web site, or supply it directly to each data vendor, or send it to an industry-wide data clearinghouse that’ll probably never exist.

In addition, lots of other data sources — licensing bodies, rating sites, whatever — would distribute structured information that’s already normalized and can be correlated perfectly to these master records.

All this data would then be collated by data vendors such as Localeze and sold to Web companies such as Google or, for that matter, Loladex.

Finally, the Web companies would build applications that use the structured data for searching by consumers (input) and display to consumers (output).

This worldview may be summarized thus:

More structured data in → Better answers out.

Or as Marchex’s Matthew Berk (who’s a smart guy) said at the panel here: “We think local search is about structured search.”

Berk gave a very good example, which I also use when discussing Loladex: If you’re looking for a doctor, you need to know whether he takes your insurance. That’s true, without a doubt.

But here’s the problem I have:

The majority of information available about any company, and particularly about any small company, will never be structured. It’ll exist only on the general Web, where it must be searched on its own terms — that is, as unstructured text.

To me, this suggests that the most pressing data problem isn’t how to gather more structured data, but how to search unstructured data (on Web pages) and return structured answers.

I live on both sides of this equation, by the way. My wife runs a small cookie bakery, and I’m in charge of distributing her data to online sources.

Because of my background, I’m more informed and motivated than most small business owners. And yet, to be honest, just keeping her Web site up-to-date is a chore. On Yelp right now, I’m sorry to say, her hours are incorrect. I should update it, but I just haven’t.

Accuracy on our own Web site is always my #1 priority, because that’s our official voice. Also it’s where most people land when they search for “Lola Cookies.”

Keeping Yahoo Local accurate is on my list, too, but it’s lower down. Ditto Google and YellowPages.com and the other big sites.

I never think about the data vendors one layer back, like InfoUSA, unless they happen to call the store. (Which InfoUSA does, to its credit.)

Meanwhile, plenty of interesting and searchable information about the bakery exists in other places on the Web, in formats that aren’t even addressed by the concept of “attributes.”

A TV broadcast from the bakery aired live on the local morning news recently, for instance. If you watched the show, you might search for us with a term like “fox 5 cookies virginia.” Where does that fit in the world of structured data?

I raised this general issue at yesterday’s panel. What were the panelists doing about this wealth of unstructured Web data, which right now is the dark matter of the local-search universe?

The answer I got was, basically, “Not much.”

Most panelists said they do only highly targeted crawls, focusing on sites that have structured data that can “extend or validate” their own data, in the words of Localeze’s Jeff Beard. An example might be the site of a professional group such as the American Optometric Association.

No panelist was ready to start indexing the sites of individual businesses, or locally focused blogs, or any other sites that are unstructured but potentially rich in content.

The only (mild) exception was Erron Silverstein of YellowBot, who also said his company limits itself to targeted crawls — but included local media, such as newspapers, among his targets.

A few players are indexing the broader Web and then associating pages with specific businesses (which is the important part). Most notable are Google and Yahoo, who do it for their local search products.

Of course, they’re already indexing the entire Web. It’s less of a stretch for them.

Google and Yahoo also buy structured data from InfoUSA, Localeze and others, so it’s not like such data is obsolete. But they’re getting the same info directly from some businesses, and those updates are likely more timely, more accurate, and more complete.

Meanwhile, their Web indices are opening up a realm of data that traditional vendors like Acxiom — represented by Jon Cohn on yesterday’s panel — simply don’t care to address.

I suspect that, sooner than you’d imagine, Google and Yahoo will be buying structured data not so that users can search it directly, but for two less-flattering reasons:

1. To help find Web pages they can associate with each business

2. To fill ever-smaller gaps in the coverage that results from #1

Matthew Berk of Marchex argued that a good local search must be structured to “help someone walk down the decision trail” by using filters to narrow their search progressively:

I need an orthopedist in Boston … in the Back Bay … who accepts United Healthcare.

I think users are more likely to learn that they can go to Google and type “orthopedist back bay united healthcare” — particularly if it produces a good top result the first time they try.
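For the programmers in the audience, here is a minimal sketch of what a filter-driven "decision trail" might look like over structured records. Everything in it is an assumption made for illustration: the `Listing` fields, the `decision_trail` helper, and the sample practices are invented, and none of it describes how Marchex or anyone else actually builds this.

```python
from dataclasses import dataclass, field


@dataclass
class Listing:
    # Hypothetical structured record; the field names are illustrative only.
    name: str
    specialty: str
    city: str
    neighborhood: str
    insurers: set = field(default_factory=set)


def decision_trail(listings, *filters):
    """Apply each filter in turn, narrowing the result set step by step."""
    results = list(listings)
    for keep in filters:
        results = [item for item in results if keep(item)]
    return results


# Made-up sample data, not real practices.
LISTINGS = [
    Listing("Practice A", "orthopedist", "Boston", "Back Bay", {"United Healthcare"}),
    Listing("Practice B", "orthopedist", "Boston", "Back Bay", {"Aetna"}),
    Listing("Practice C", "orthopedist", "Boston", "South End", {"United Healthcare"}),
    Listing("Practice D", "dentist", "Boston", "Back Bay", {"United Healthcare"}),
]

# Each lambda is one step on the trail: specialty and city, then
# neighborhood, then insurance.
matches = decision_trail(
    LISTINGS,
    lambda l: l.specialty == "orthopedist" and l.city == "Boston",
    lambda l: l.neighborhood == "Back Bay",
    lambda l: "United Healthcare" in l.insurers,
)
print([m.name for m in matches])
```

Note that the last filter only works if somebody has already captured insurance acceptance as a structured field for every listing, which is exactly the sticking point.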

The burden of local search, it seems to me, is to do something that Google can’t match with an unstructured Web search.

In any case, the search portals will ultimately use their indexed Web pages to extract and cross-check structured data directly. Over time — probably just a couple of years — such automated processes will yield data that’s more current and detailed than anything that’s produced by scanning phone books or calling stores.

The resulting search functionality, integrating both structured and unstructured data, will be sold to other companies as a Web service, and data vendors such as InfoUSA will become irrelevant to local search.

Now that would be a dysfunctional environment for many of the Kelsey attendees.

I’m not sure exactly how companies like InfoUSA and Acxiom should tackle the unstructured Web. It’ll demand a new way of thinking, and probably a new way of selling.

But I’m certain that they ignore unstructured data at their peril.

by Laurence | Jul 16, 2007 | Competitors, Local search

I generally don’t read Wired magazine unless I’m flying, so I haven’t seen much of it lately. But yesterday, in Dulles airport on the way to California, I picked up the July issue & noted this cover line:

Google Maps and the Rise of the Hyperlocal Web

Turns out there were two loosely related stories inside: A sloppy kiss for Google Maps as a platform for the coming geoweb, and a “dispatch from the hyperlocal future” from cyberpunk author & pundit Bruce Sterling.

I agree that Google Maps — Google generally, really — is setting some of the terms of debate in local, and that KML, the emerging standard it acquired via its purchase of Keyhole, is a Good Thing.

Still, the story went a bit far in its “game over” portrayal of Google Maps as the epicenter of a movement that’s (according to me, anyway) far too young to have a leader, let alone a winner.

The story’s broader points were well taken, however, and the overall thesis — that people with tools, not companies with algorithms, will power this geostuff — captured something real. As always, I don’t like the facile equation of local=maps, but what can you do?

All of this dovetailed nicely with another July feature, a nice profile of Luis von Ahn — a MacArthur winner with a human-centric outlook on computing. The most interesting article in the issue, by far, and obviously applicable to local.

Bruce Sterling’s riff on hyperlocal, alas, was speculative quasifiction, and darn near unreadable. I’d like to see Wired tackle what “hyperlocal” actually means, but this was just a parade of buzzwords, mostly made up.

by Laurence | Jun 18, 2007 | Competitors, Local search, Loladex

Palore is a simple browser tool that recognizes when you’re using a local-search site and artfully annotates your results with little informational icons.

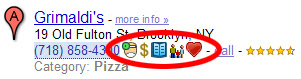

An annotated result on Google Maps looks like this (I’ve circled the Palore icons, which wouldn’t normally appear on Google Maps):

Mousing over an icon gives you a pop-up with more info. The little doctor icon, for instance, shows health-violation data. You might also see reviews, booking links, and more.

This is extremely useful: In essence, Palore is showing Google — and everyone else — how to address some of the weaknesses of a map-dominated interface. (My somewhat outdated post on Google’s UI is here.)

Palore is supposedly in closed beta, by the way, but you can download some specialized versions (kosher, Zagat, “green”) from its home page.

I read about Palore a while ago and thought it was a great idea — which is another way of saying that it’s kinda like Loladex. Apparently it has done very well in Israel, where it started.

When I finally got around to downloading it today, however, I found that the specialized versions don’t appear to include the most important feature: The ability to pick & choose which icons get displayed, and how they get displayed — to switch off everything except the health-violation icon, say, or to put the menu icon first.

Maybe this functionality is in the non-specialized version? Certainly it’s implied by Palore’s home-page text:

- “Use Palore to see the things you care about when looking for restaurants and other local businesses online”

- “Choose from dozens of information-icons that will instantly appear in any search site you use”

Yup: That’s what I want! So why can’t I do it?

Assuming the “real” beta works the way I’d like, or at least that it ultimately will, here are my nominations for what else could be better about Palore — which I really do admire, by the way:

- I’m sure Palore hears this from everyone: No one wants to download a browser add-on. It’s pretty painless, but it’s still a psychological hassle & it limits their potential audience. Airfare metasearcher Sidestep went this route for years until, in essence, it was forced to change its focus by fast-growing competitors such as Kayak. Sidestep still offers a plug-in, as well as a Google toolbar with integrated Sidestep functionality, but both options are buried in its destination Web site — as they should be. Palore is building its model on a behavior that its users will adopt only grudgingly.

- Very much related: Palore adds information to other sites’ search results, but it doesn’t allow me to adjust the results themselves. If Palore knows that I care about vegetarian restaurants, for instance, it knows that Google Maps’ #9 result is much more relevant than the #1 result. But as the user, I’ll still need to scroll down to realize this fact. Worse, the most relevant result may be on the third (or thirtieth) page of results.

- Both of the above complaints amount to the same thing, I guess: Palore would be better off building a destination site. The local-search space is still wide-open, and they should have the courage of their convictions. Maybe they figure they’ll get more traffic by piggybacking on established sites, but I bet they’re wrong.

- Palore doesn’t seem to be exposing an API that would allow anyone to power an icon without their mediation; instead, you’re asked to contact them about “partnering opportunities.” No matter how streamlined their process is, it’s more limiting than do-it-yourself. Not very 2.0.

- Palore seems overly focused on restaurants. (They address this in their blog.)

- A minor quibble: Palore doesn’t work on Yahoo Maps, because Yahoo Maps is built in Flash. That’s a big traffic source, and could really benefit from Palore icons. But of course I’ve already recommended that they move away from this model, so I can’t complain much. And I don’t think it’s addressable, anyway.
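To make the re-ranking complaint in the second item above concrete, here is a rough sketch of what I mean by adjusting the results themselves. It is purely hypothetical: the `rerank` function, the `tags` field, and the sample restaurants are my own inventions, and I have no idea how Palore or Google Maps represent any of this internally.

```python
def rerank(results, preferred_tags):
    """Move results that match more of the user's preferred tags to the top.

    sorted() is stable, so results with the same number of matches keep
    the host site's original order.
    """
    return sorted(
        results,
        key=lambda r: -len(preferred_tags & r["tags"]),
    )


# Made-up results in the order a host site might return them.
results = [
    {"name": "Steakhouse", "tags": {"steak"}},
    {"name": "Diner", "tags": {"breakfast"}},
    {"name": "Veggie Cafe", "tags": {"vegetarian"}},
]

reranked = rerank(results, {"vegetarian"})
print([r["name"] for r in reranked])
```

An add-on that only annotates can't do this easily, since it sees one page of results at a time; a destination site that owns the full result list can.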

Having said all this, I must add that I hope Palore doesn’t read this post — or, if it does, that it doesn’t take my advice. If it did, I’d have a scary competitor.
